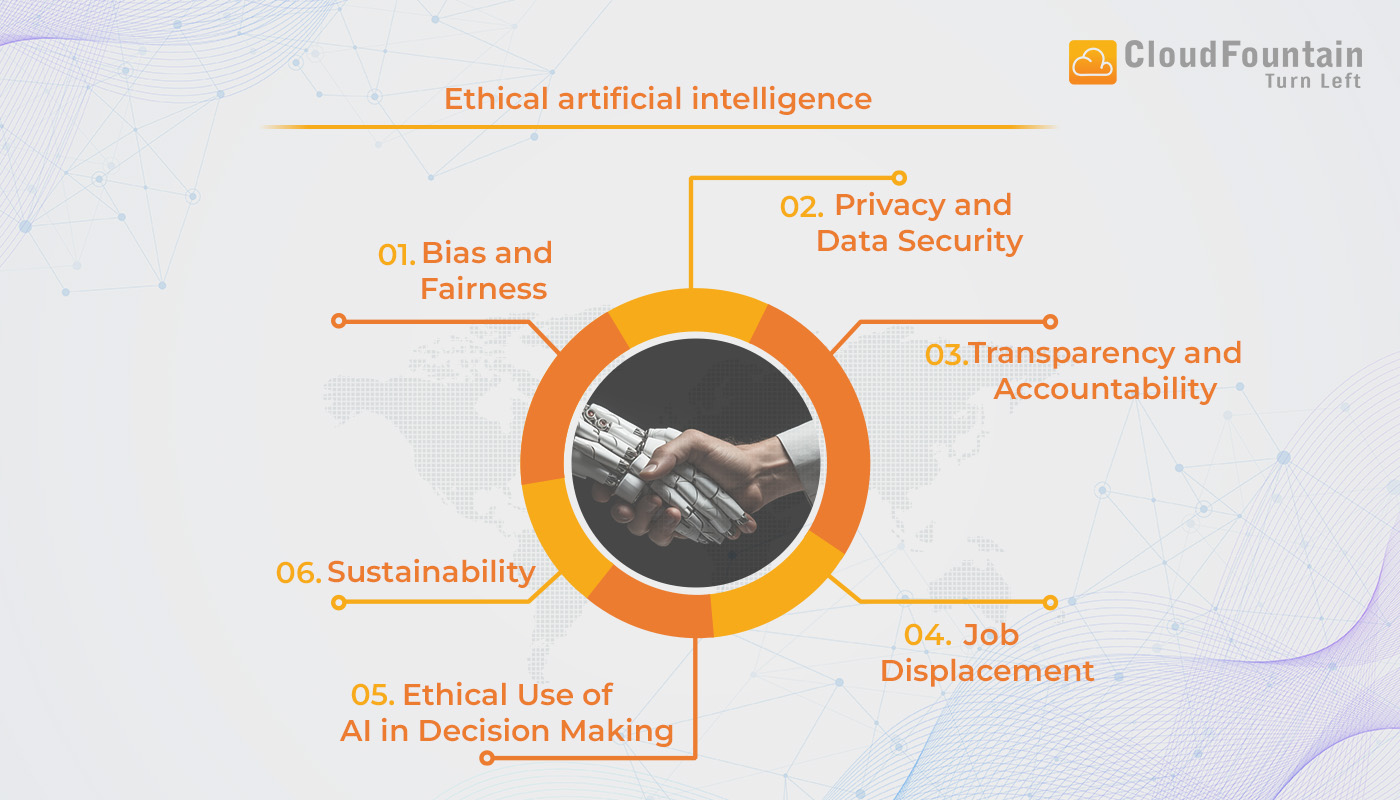

Ethical Artificial Intelligence: Navigating the Future Responsibly

As artificial intelligence (AI) continues to evolve and integrate into daily life, ethical considerations become increasingly important. From autonomous vehicles to healthcare diagnostics, AI systems are making decisions that can significantly affect human lives. Ensuring that these systems operate ethically is therefore a critical concern for developers, policymakers, and society as a whole.

Understanding Ethical AI

Ethical AI refers to the development and deployment of artificial intelligence systems that are aligned with moral values and principles. This includes ensuring fairness, transparency, accountability, and privacy in AI operations. The goal is to create AI technologies that benefit society while minimizing harm.

Key Ethical Principles

- Fairness: AI systems should be designed to avoid bias and discrimination. This involves using diverse datasets and implementing algorithms that treat all individuals equitably.

- Transparency: The decision-making processes of AI should be understandable and accessible to users. This helps build trust and allows for informed decision-making by those affected by AI outcomes.

- Accountability: Developers and organizations must take responsibility for the actions of their AI systems. Clear guidelines should be established for addressing errors or unintended consequences.

- Privacy: Protecting user data is crucial in maintaining trust in AI technologies. Robust data protection measures must be implemented to safeguard personal information.

The Challenges of Implementing Ethical AI

The path to ethical AI is fraught with challenges. One major issue is the inherent bias present in training data, which can lead to unfair outcomes if not properly addressed. Additionally, the complexity of some AI models makes it difficult for even their creators to fully understand how decisions are made, complicating efforts towards transparency.

Another ongoing challenge is balancing innovation with regulation. Regulations are necessary to ensure ethical standards are met, but they must also leave room for technological advancement rather than stifling creativity or progress.

The Role of Stakeholders

A collaborative approach is essential in promoting ethical AI development. Policymakers need to establish clear guidelines that encourage responsible innovation while protecting public interests. Meanwhile, developers should prioritize ethical considerations from the outset of design processes.

The public also plays a crucial role by staying informed about how these technologies affect their lives and advocating for ethical practices in technology use.

The Road Ahead

The journey towards ethical artificial intelligence requires continuous effort and vigilance from all stakeholders. As technology advances at an unprecedented pace, so too must our commitment to ensuring these tools benefit humanity rather than harm it.

By prioritizing ethics in artificial intelligence development today, we can help shape a future where technology enhances human well-being without compromising our values or rights.

8 Essential Tips for Promoting Ethical Practices in Artificial Intelligence

- Ensure transparency in AI systems to understand how decisions are made.

- Use diverse and inclusive datasets to avoid bias in AI algorithms.

- Regularly assess and mitigate potential risks associated with AI applications.

- Respect user privacy by implementing strong data protection measures.

- Promote accountability by clearly defining roles and responsibilities in AI development.

- Encourage continuous learning and improvement to enhance ethical practices in AI.

- Engage with stakeholders to gather feedback and address concerns about AI technologies.

- Comply with relevant laws, regulations, and ethical guidelines when deploying AI solutions.

Ensure transparency in AI systems to understand how decisions are made.

Transparency in AI systems is crucial for understanding how decisions are made, fostering trust and accountability. When AI processes are clear and understandable, users and stakeholders can gain insights into the mechanisms driving outcomes, allowing for informed decision-making and scrutiny. This transparency helps identify potential biases or errors within the system, enabling developers to address them effectively. By providing detailed explanations of algorithms and data sources, organizations can build confidence among users that AI technologies are being used responsibly and ethically. Ultimately, transparent AI systems empower individuals to engage with technology in a way that aligns with societal values and expectations.
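One way to make "detailed explanations of algorithms" concrete is to report how much each input contributed to a decision. The sketch below is only an illustration, assuming a simple linear scoring model; the function name `explain_linear_score`, the feature names, and the weights are all hypothetical and not taken from any particular system. More complex models generally need dedicated explanation methods.

```python
def explain_linear_score(weights, bias, features):
    """Return the score of a linear model and each feature's contribution.

    Each contribution is weight * feature value, so the contributions plus
    the bias add up exactly to the final score.
    """
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    # Rank features by how strongly they pushed the score in either direction.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical model and applicant, for illustration only.
weights = {"income": 2.0, "debt": -3.0, "years_employed": 0.5}
bias = 1.0
features = {"income": 4.0, "debt": 1.0, "years_employed": 2.0}

score, ranked = explain_linear_score(weights, bias, features)
print(f"score = {score}")
for name, contribution in ranked:
    print(f"{name}: {contribution:+.1f}")
```

A breakdown like this can be shown to the person affected by a decision, which supports the scrutiny described above.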

Use diverse and inclusive datasets to avoid bias in AI algorithms.

In the development of ethical artificial intelligence, utilizing diverse and inclusive datasets is crucial to minimizing bias in AI algorithms. When datasets lack diversity, AI systems can inadvertently learn and perpetuate existing societal biases, leading to unfair or discriminatory outcomes. By incorporating a wide range of data that reflects different demographics, cultures, and perspectives, developers can create more balanced algorithms that are better equipped to make equitable decisions. This approach not only enhances the fairness and accuracy of AI systems but also helps build trust with users by demonstrating a commitment to inclusivity and social responsibility. Ultimately, diverse datasets are fundamental in ensuring that AI technologies serve all individuals fairly and justly.
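Bias of this kind can be measured as well as discussed. As a minimal sketch, the code below compares the rate of positive predictions across groups, a quantity often called the demographic parity gap. It is one of several possible fairness metrics, not a complete audit; the function names and the toy data are hypothetical.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Return the share of positive predictions (value 1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, prediction in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(prediction == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(groups, predictions):
    """Return the difference between the highest and lowest selection rate."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: group membership and a model's yes/no predictions.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
gap = demographic_parity_gap(groups, predictions)
print(rates)
print(f"demographic parity gap = {gap}")
```

A large gap does not prove discrimination on its own, but it is a signal that the training data and the model deserve a closer look.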

Regularly assess and mitigate potential risks associated with AI applications.

Regularly assessing and mitigating potential risks associated with AI applications is crucial for ensuring their ethical deployment. As AI systems become more integrated into various sectors, they can introduce unforeseen challenges and vulnerabilities. By routinely evaluating these systems, developers and organizations can identify potential biases, security threats, or unintended consequences before they escalate. This proactive approach not only helps in maintaining the integrity and reliability of AI technologies but also builds trust among users and stakeholders. Implementing robust risk management strategies allows for the early detection of issues, enabling timely interventions that align AI applications with ethical standards and societal values.

Respect user privacy by implementing strong data protection measures.

Respecting user privacy is a fundamental aspect of ethical artificial intelligence, and implementing strong data protection measures is crucial in achieving this goal. AI systems often rely on vast amounts of data to function effectively, and this data can include sensitive personal information. Ensuring robust security protocols are in place helps protect this information from unauthorized access or breaches. Techniques such as encryption, anonymization, and secure storage are essential to safeguarding user data. Additionally, transparency about how data is collected, used, and stored builds trust with users and ensures compliance with privacy regulations. By prioritizing these measures, developers can create AI systems that honor user privacy while maintaining functionality and innovation.
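As a rough sketch of one such measure, the code below drops direct identifiers from a record and replaces a linking field with a keyed hash (HMAC-SHA256) from Python's standard library. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key can recompute the mapping. The field names, the `scrub_record` helper, and the demo key are assumptions made for illustration.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (hex string)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record, secret_key, id_fields=("email",), drop_fields=("name",)):
    """Return a copy of the record with identifiers dropped or pseudonymized."""
    cleaned = {}
    for key, value in record.items():
        if key in drop_fields:
            continue  # Remove fields that are not needed downstream.
        if key in id_fields:
            cleaned[key] = pseudonymize(str(value), secret_key)
        else:
            cleaned[key] = value
    return cleaned


# Demo key only: a real key should come from a secrets manager, not source code.
DEMO_KEY = b"replace-with-a-secret-key"

# Hypothetical record, for illustration only.
record = {"name": "Ada Example", "email": "ada@example.com", "age_band": "30-39"}
print(scrub_record(record, DEMO_KEY))
```

Because the same input and key always give the same digest, records can still be joined on the pseudonymized field without exposing the original value.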

Promote accountability by clearly defining roles and responsibilities in AI development.

In the realm of ethical artificial intelligence, promoting accountability is crucial to ensuring that AI systems are developed and deployed responsibly. A key strategy for achieving this is to clearly define roles and responsibilities throughout the AI development process. By establishing who is responsible for each aspect of an AI project—from data collection and algorithm design to testing and implementation—organizations can ensure that all team members understand their specific duties and the ethical implications of their work. This clarity not only helps prevent oversights and errors but also fosters a culture of responsibility, where developers are more likely to consider the broader impact of their decisions on society. Furthermore, having defined roles allows for better tracking of accountability, making it easier to address any issues that arise during or after deployment.

Encourage continuous learning and improvement to enhance ethical practices in AI.

Continuous learning and improvement are vital to enhancing ethical practices in artificial intelligence. As AI technologies rapidly evolve, it is crucial for developers, organizations, and policymakers to stay informed about the latest advancements and ethical challenges. By fostering a culture of ongoing education and adaptation, stakeholders can better identify potential biases, address emerging ethical concerns, and implement best practices. This approach not only helps refine AI systems to be fairer and more transparent but also ensures that they remain aligned with societal values. Encouraging continuous learning enables a proactive stance on ethics, allowing for the development of AI technologies that are both innovative and responsible.

Engage with stakeholders to gather feedback and address concerns about AI technologies.

Engaging with stakeholders is a crucial step in developing ethical artificial intelligence technologies. By actively seeking feedback from a diverse group of stakeholders, including users, policymakers, industry experts, and affected communities, developers can gain valuable insights into the potential impacts and concerns surrounding AI systems. This collaborative approach helps identify and address ethical issues early in the development process, ensuring that the technology aligns with societal values and needs. By fostering open dialogue and incorporating diverse perspectives, organizations can build trust and create AI solutions that are not only innovative but also responsible and equitable.

Comply with relevant laws, regulations, and ethical guidelines when deploying AI solutions.

Ensuring compliance with relevant laws, regulations, and ethical guidelines is a fundamental aspect of deploying AI solutions responsibly. This involves staying informed about the legal frameworks and industry standards that govern AI technologies in different sectors. By adhering to these regulations, organizations can mitigate risks associated with data privacy breaches, discrimination, and other ethical concerns. Compliance not only protects companies from legal repercussions but also fosters trust among users and stakeholders by demonstrating a commitment to operating within established ethical boundaries. As AI continues to evolve, maintaining alignment with updated laws and guidelines will be crucial in promoting the responsible and beneficial use of these technologies across various applications.